I am fortunate to live my life from a very philosophical angle. Most of my pursuits are solitary, and my days are spent in a mind palace where I can muse on different topics free of external distractions. This privilege is partly designed, but mostly a matter of happenstance. I am incredibly lucky to have the time and space to spend my days working on a project in the kitchen, twiddling my thumbs in a small balcony garden, or going for runs along a waterfront, all while pondering the meaning of life, the universe, and everything.

Lately, my mind has turned firmly to the topic of artificial intelligence. This is no surprise, as the last year of my Master’s will focus on a subset of the field: machine learning. To prime myself, I’m spending the summer reading a combination of philosophy and engineering books that cover the history, theory, and practice of artificial intelligence. I began with a book that has long been on my reading list, Superintelligence by Nick Bostrom.

My introduction to the concerns this book articulates and addresses was through Wait But Why, which covers superintelligence (which Bostrom defines as “any intellect that greatly exceeds the cognitive performance of humans in virtually all domains of interest”) in a two-part series. The posts, which are fun and contain pretty pictures to distract you from the heavy topic, summarize the concerns of Bostrom and others regarding AI. These concerns are perhaps best summarized by Bostrom himself, who writes:

We have what may be an extremely difficult problem with an unknown time to solve it, on which quite possibly the entire future of humanity depends.

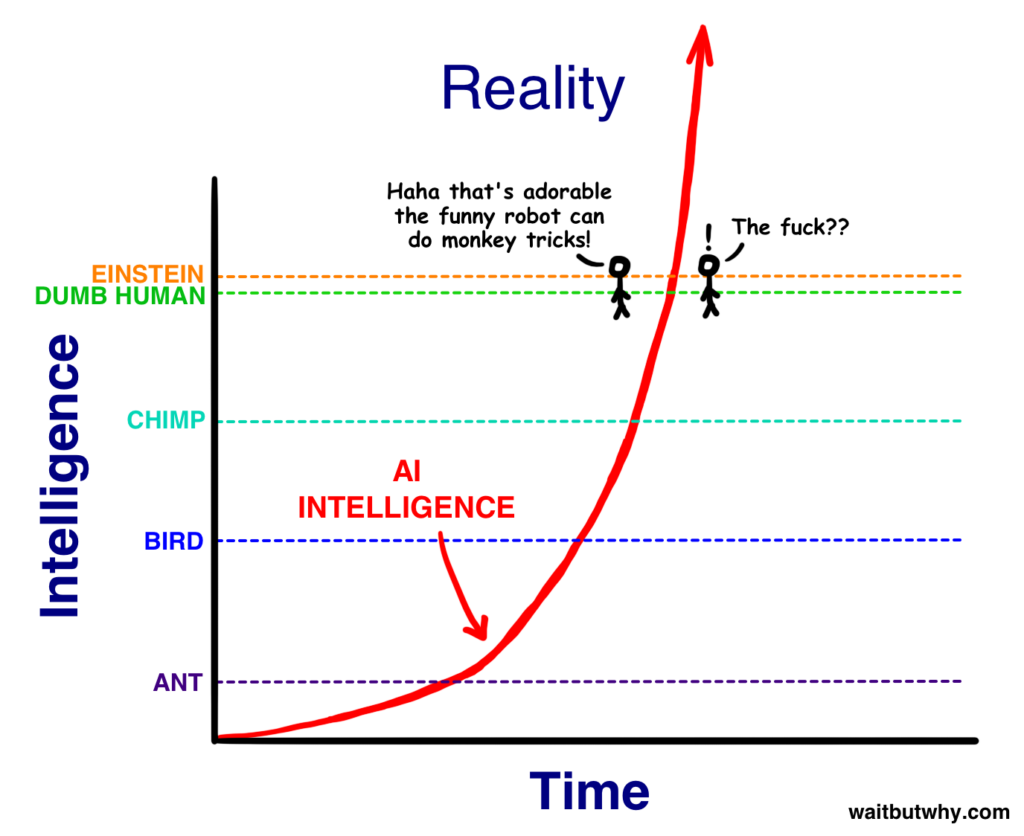

If you’d rather not read the posts, the notion is that intelligence provides great capabilities, but humans operate on a human timescale (slow). Machines are not intelligent, but they operate on a machine timescale (way faster!). If machines were endowed with intelligence, that intelligence would improve rapidly (due to its recursive nature, where intelligence begets more intelligence) and would outpace human intelligence at an uncontrollable rate, putting human existence in peril. This is best illustrated by this chart from Wait But Why:

Bostrom explores this notion in greater detail throughout his book.

Superintelligence takes a somewhat meandering path given how pressing Bostrom considers the issue at hand, but some exposition is necessary for neophytes and naysayers. Once the concern is established by way of showing the number of paths to superintelligence (through “pure” artificial intelligence, whole brain emulation, human-computer interfaces, cognition enhancements, and/or networks), the forms it may take (all of which illustrate how disadvantaged humanity will be), and the various degrees of system friction in each form (which dictate the speed of superintelligence “takeoff”), we turn to the problems of superintelligence.

These take two major forms. The first, more corporeal concern focuses on humanity’s inability to work together (leading to many fractured projects in a zero-sum battle to reach superintelligence) and to share wealth. The wealth problem arises after the fact: the rise of AI leads to one of many possible dystopias as a result of massive economic upheaval.

The second concern is about artificial intelligence itself, and here is where Bostrom expends most of his energy. It’s hard to articulate these concerns succinctly, so if you have 15 minutes, I highly recommend this video from Sam Harris, which was inspired by this book:

While these problems are interesting, what I found more captivating were the questions behind the problems. What are goals? What are motives? What is will? What is control? How do we define these things ourselves so that we can design them effectively in a new intelligence?

Bostrom does not answer these questions directly, nor does he resolve the problems he raises. The book ends on a call to action that others (like Sam Harris, Elon Musk, Bill Gates, Stuart Russell, etc.) are pursuing through various projects. It’s a fitting end for a book that begins in a world beyond our current reality. It’s a world that is not without detractors. Many note that there are greater threats to humanity than superintelligence (like a global pandemic, nuclear war, or vast social inequalities), and that we waste valuable energy by pondering it. Others note that AI is far beyond our grasp, either to such a degree that it may never be reached or so distant that we will surely address the problems along the way with the vast amount of time available. Harris addresses this concern, as do Allan Dafoe and Russell in this MIT article.

Bostrom himself is not a “traditional” computer scientist, but his degrees in philosophy (B.A., M.A. and Ph.D.), mathematics (B.A.), logic (B.A.), physics (M.A.), artificial intelligence (B.A.), and computational neuroscience (M.Sc.) obviously give him more than enough bona fides to speak on the subject. This is a recurring theme. Many of the artificial intelligence researchers of the past and present have some background in philosophy, drawing from thinkers like Aristotle, Thomas Hobbes, René Descartes, John Locke, David Hume, John Stuart Mill, and many others who focused on the distinction between mind and matter, goals and actions, and the source of knowledge and decisional capacity.

The beauty of artificial intelligence is that it exists between a number of sciences. Its roots extend into formal logic systems and notation, mathematically rigorous proofs, probability, control systems, information theory, philosophy, psychology, and even social sciences like economics and political science. As someone who finds themselves moving between worlds both professionally and academically, I find this melting pot of ideas both attractive and comforting, but not without some anxiety as well.